Intelligent Human-Robot Collaboration

Sponsors: National Science Foundation

Description

Intelligent human-robot collaboration in the workplace is crucial to the productivity and flexibility of many modern manufacturing companies. Intelligent, reliable, and safe communication and collaboration between humans and robots on the factory floor remains a major challenge, with a range of scientific and technical issues still to be solved in sensing, cognition, prediction, and control. The research objective of this project is to develop, build, and validate an intelligent human-robot collaboration framework that enables pervasive sensing, customized cognition, real-time prediction, and intelligent control, and thereby ensures operational safety and production efficiency when implemented on the factory floor.

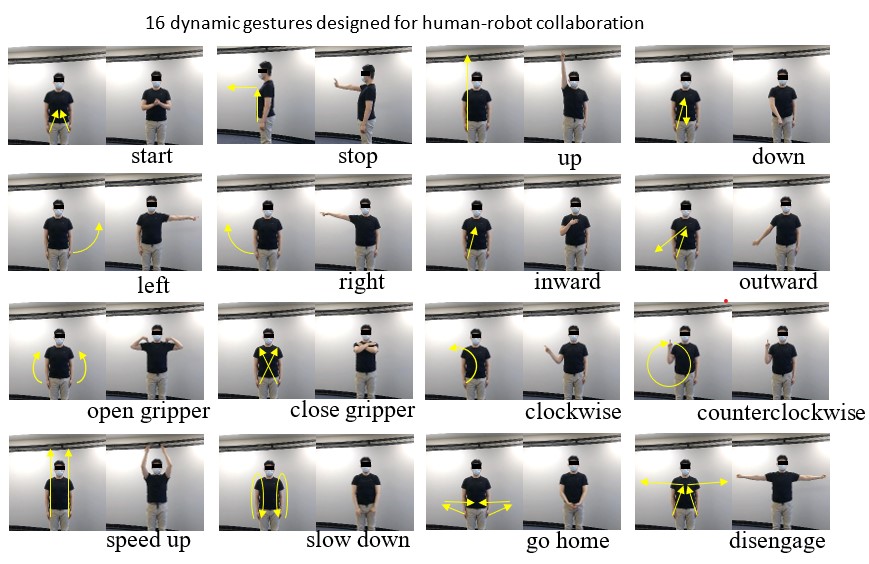

Some of our research results include:

1. dynamic gesture design and feature extraction using the Motion History Image method;
2. multi-view gesture data collection from multiple human subjects using RGB cameras;
3. dynamic gesture recognition for human-robot collaboration using convolutional neural networks;
4. action completeness modeling with background-conscious networks for weakly-supervised temporal action localization;
5. action recognition of human workers using discriminative feature pooling and a video segment attention model;
6. construction of individualized convolutional neural networks for skeletal data-based action recognition.
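For readers unfamiliar with the Motion History Image (MHI) method named among the results above, the following is a minimal sketch of the standard textbook formulation, not the project's own implementation. Pixels that moved in the latest frame are set to a maximum value, and all other pixels decay toward zero, so that a single image encodes where and how recently motion occurred. The function names and the parameters `tau`, `delta`, and `threshold` are illustrative assumptions, as is the use of simple frame differencing to detect motion.

```python
import numpy as np


def update_mhi(mhi, prev_frame, frame, tau=10.0, delta=1.0, threshold=30):
    """Apply one MHI update step to a pair of grayscale frames.

    tau, delta and threshold are illustrative values (assumptions),
    not settings taken from the project.
    """
    # Binary motion mask from the absolute difference of consecutive frames.
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))
    moving = diff >= threshold
    # Moving pixels are reset to tau; all others decay by delta, floored at 0.
    decayed = np.maximum(mhi - delta, 0.0)
    return np.where(moving, tau, decayed)


def motion_history_image(frames, tau=10.0, delta=1.0, threshold=30):
    """Collapse a sequence of grayscale frames into one MHI scaled to [0, 1]."""
    frames = list(frames)
    mhi = np.zeros(frames[0].shape, dtype=np.float32)
    for prev_frame, frame in zip(frames, frames[1:]):
        mhi = update_mhi(mhi, prev_frame, frame, tau, delta, threshold)
    # Brighter values mark more recent motion.
    return mhi / tau
```

The resulting single-channel image can then be passed to a feature extractor or classifier as a compact summary of a dynamic gesture; how the project does this is not specified here.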